Yesterday it was cold enough on campus that we left Piper and Nika at home. They are both used to getting a walk around 10 AM, so I drove home, had lunch, and let them out. When I got back to my office I remembered I'd left my laptop open (normally I put it to sleep when I'm at work or during the night). A quick Internet search turned up a command-line application that takes pictures with the camera built into the new MacBook and MacBook Pro computers.

I used ssh to log into the laptop from work, downloaded the application, ran it, and then copied the image back using scp. I expected to see either nothing but the couch cushions or maybe our cat Ivan sitting on the back of the couch watching birds.

Instead, it's Piper sleeping on the couch! She's not normally allowed up there, and whenever we've left her home, she's on her bed on the floor when we get back. She's a sneaky one. A few minutes later I tried again and caught her standing on the couch, looking out the window. When I got home later, I discovered we'd had a visit from some proselytizing Christians, and that's why she was looking out there.

I remember being surprised, when I moved to California, that leaves and grass clippings were "cleared" using what we called leaf blowers. If you happen to live in an enlightened area where people still clean up their leaves with a rake or a mulching lawnmower, a leaf blower is a two-cycle engine that blows a high volume of air out of a wand the operator uses to move leaves around. They're incredibly noisy (50 feet away they're about 100 times louder than World Health Organization recommendations for outdoor sound levels), and they cause an unbelievable amount of air pollution (up to seventeen times the amount a modern automobile produces).¹

Recently, I had the same surprise on campus when Facilities Services at UAF started equipping their sidewalk cleaners with the same devices, except now they're using them to blow snow. I don't have the data to perform an economic analysis of this decision, but I guarantee that when you add in the externalities of noise and air pollution, engine maintenance, the damage done to cars when they blow gravel into them, and the impact of burning fossil fuels on the global climate, a shovel starts looking pretty cheap.

They also don't do a very good job, because they can't move snow that's been packed down at all. Check out all the footprints in the image. I can't help but wonder, once again, whether some problems are better solved with less modern technology.

¹ These figures come from a variety of sources, referenced on this site. It's a biased site, but I trust the cited sources.

<rant>

image from psd

In my job as a systems administrator, spam is one of those things I accept as fact but have to deal with as best I can so my users can actually get work done. I came across this article on Slashdot today, and even though there's absolutely nothing revelatory in it, it reminded me that people fail to appreciate where spam comes from. It's not evil spammers sending you junk mail; spam comes from computers running Microsoft Windows that have been infected with something. If you don't like spam, stop sending Microsoft money for their software. Every time you buy a Microsoft product, you're supporting all the network effects of their software. The same network effects that make it easy to share a Word document with other Microsoft Office users also result in more infections, more spam, and more wasted time and money.

</rant>

I have a friend whose house¹ burned down a few years ago. He and I are both baseball fans, and I'd lent him my copy of Robert K. Adair's The Physics of Baseball before the fire. I never saw the book again, and I wasn't going to bother him about such an inconsequential item when he was trying to replace all the really important things he'd lost. I've since replaced it with a newer edition, and he's now living in his new house.

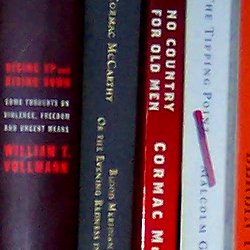

Since that happened, I've worried about what I'd be able to replace if we had a disaster, mostly because I wouldn't be able to remember everything. Normally, I suppose, you'd make a long list of the stuff you own and file it with your insurance company or put it someplace safe. But there's a much faster way: just take a digital picture of everything, burn it all to a CD or DVD, and file that away. We've got a reasonably inexpensive 3.1-megapixel camera, and I just took a couple of photos of one of my bookshelves. The book titles were too small to resolve when I shot the entire bookshelf in one frame, but you can easily read everything at full resolution when only a couple of shelves fit in the frame (the image above is a sample of that).

A few minutes with a camera and I'll have a nice record of all of it.

¹ Google as English-language expert: I couldn't remember whether this phrase was "who's house" or "whose house". A Google search for the first phrase yielded 76.7 thousand hits. "Whose house" is almost ten times more popular (737 thousand), so I figured that must be correct. To confirm this, I repeated the search, adding 'site:http://www.nytimes.com' to the search string: 295 hits for "whose house" at the New York Times, zero for "who's house". Case closed.

Also: the new version of Firefox will spell-check text entries like the big textarea I'm typing this post into. It goes a long way toward eliminating spelling mistakes in blog posts. Check it out!

Yesterday when I was walking Piper and Nika on campus the tips of my ears got cold. I have a wool cap I wear when it's really cold, but I had my Pendleton hat on instead. It seems like whenever I'm wearing it, someone comments on it, and it does keep my head warm. But what to do about my ears?

A couple of winters ago we bought some wool yarn, circular needles, and a few knitting books with the intention of knitting stuff: a hat to start, because it seemed easier than socks and quicker than something like a sweater. Browsing around the knitting store, looking at all the cool yarn, and thinking about actually making something to wear from scratch got me all excited about the project.

Most of the time this sort of enthusiasm carries me through the first three-quarters of a project, and the desire to finally get the damned thing done gets me through the rest. This time, though, I started out overly ambitious, and my interest petered out after nine or ten rows.

Today I pulled it off the shelf in the living room and got back to work. I figure about ten more rows and I'll have a very simple knit band I can pull over my ears, under my stylin' hat, to keep them warm. Better than that, maybe the satisfaction of finishing my first knitting project will tempt me into starting and finishing a real project, like the hat I'd been thinking of a couple of years ago.

There's a fire burning in the wood stove, Spoon's Kill the Moonlight on the stereo, and I'm ready to roll.

Update, Wed, 08 Nov 2006: I finished it this evening, and you can see what it looks like under my hat in the image to the left. It fits just about perfectly, but it's not very stretchy. I think that's because the last half of it was done entirely in knit stitches. I started with some sort of knit/purl ribbing, but one of the reasons I put the project down for two years was that ribbing was too much for me to handle while I was still learning to knit.